Temporal Difference

Temporal Difference (TD) Control

- A class of model-free reinforcement learning methods which learn by bootstrapping from the current estimate of the value function.

- These methods sample from the environment, like Monte Carlo methods, and perform updates based on current estimates, like dynamic programming methods.

- Ref

TD vs MC Control

- TD: update Q-table after every time step

- MC: update the Q-table after complete an entire episode of interaction

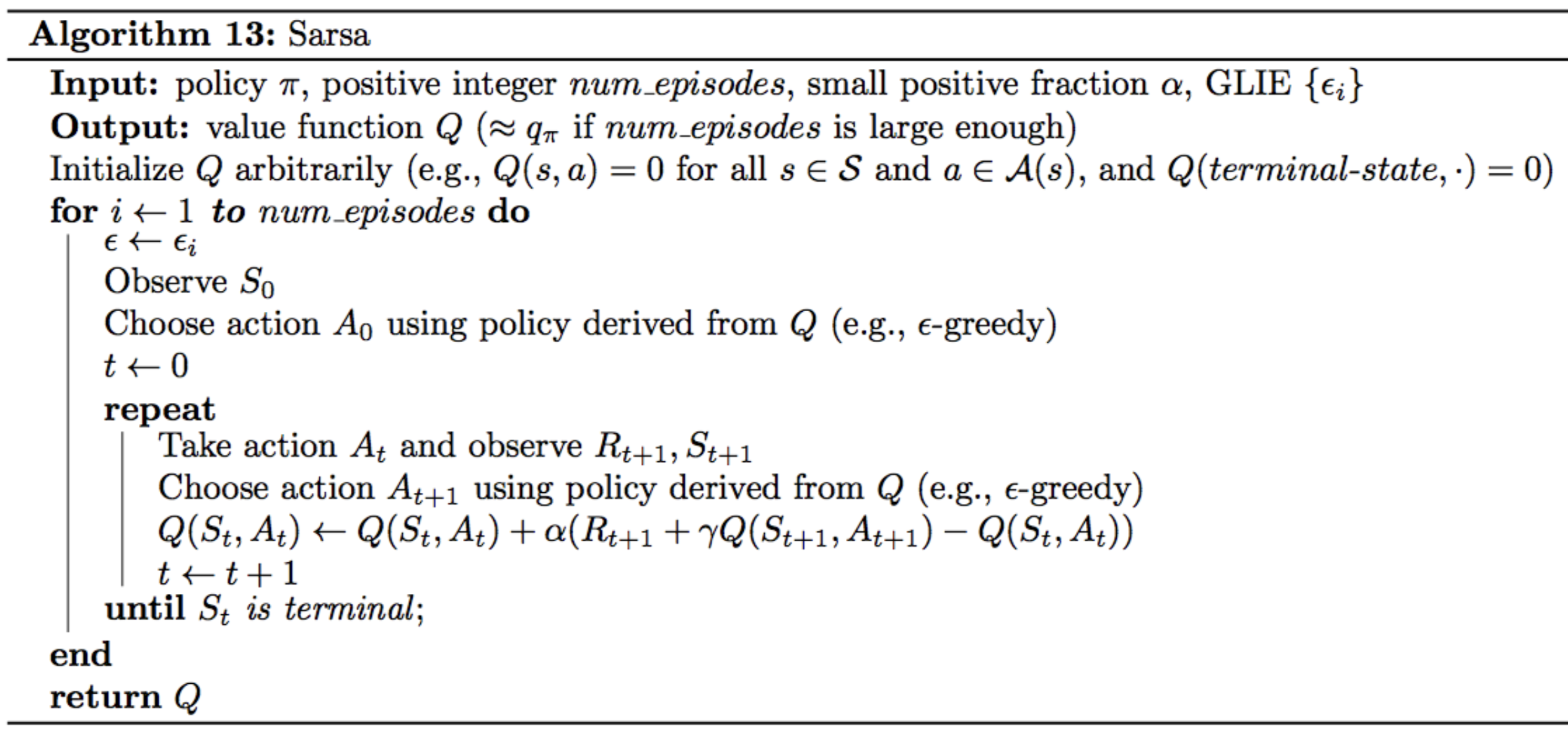

Sarsa(0) Algorithm

- Naming: State–action–reward–state–action

- In the algorithm, the number of episodes the agent collects is equal to $num$ $episodes$. For every time step $t\ge 0$, the agent:

- takes the action $A_t$ (from the current state $S_t$ that is $\epsilon$-greedy with respect to the Q-table

- receives the reward $R_{t+1}$ and next state $S_{t+1}$

- chooses the next action $A_{t+1}$ (from the next state $S_{t+1}$) that is $\epsilon$-greedy with respect to the Q-table

- uses the information in the tuple ($S_t,A_t, R_{t+1}, S_{t+1}, A_{t+1}$) tp ipdate the entry $Q(S_t, A_t)$ in the Q-table corresponding to the current state $S_t$ and the action $A_t$.

Sarsa Algorithm, image from Udacity nd893

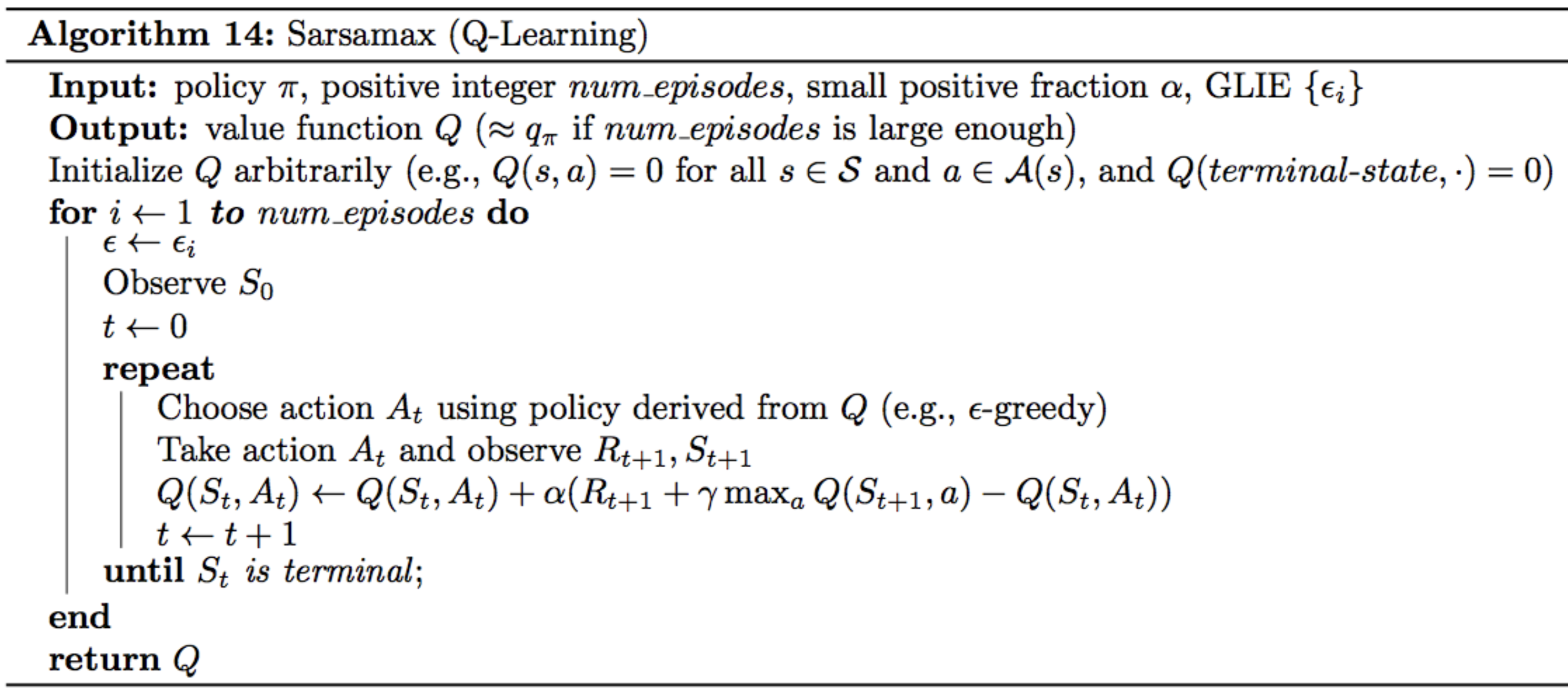

Sarsamax (Q-Learning)

Sarsamax Algorithm, image from Udacity nd893

Optimism 🔭

- For any TD control method, you must begin by initializing the values in the Q-table. It has been shown that initializing the estimates to large values can improve performance.

- If all of the possible rewards that can be received by the agent are negative, then initializing every estimate in the Q-table to zeros is a good technique.

- In this case, we refer to the initialized Q-table as optimistic, since the action-value estimates are guaranteed to be larger than the true action values.

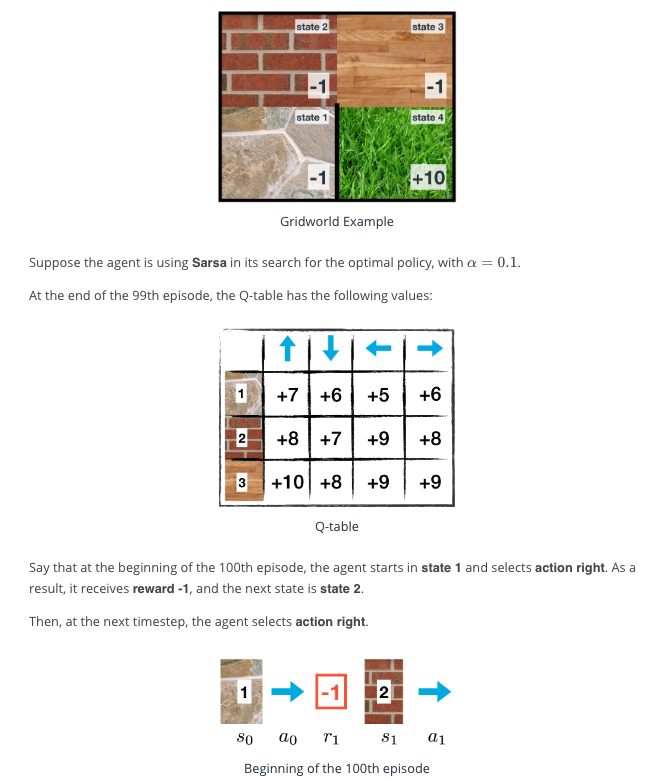

Excercise

OpenAI Gym: CliffWalkingEnv

Test

Sarsa

Sarsa Algorithm, image from Udacity nd893

Answer: 6.16