Continuous Spaces

Reinforcement Learning: Framework

- Model-based

- requires a known transition and reward model

- applies dynamic programming to iteratively compute value functions and optimal policies from that model

- Examples

- Policy iteration

- Value iteration
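As a rough illustration of the model-based idea, here is a minimal value iteration sketch. The dictionary layout `transitions[s][a] = [(probability, next_state, reward), ...]` and the two-state `mdp` are assumptions made up for this example, not part of the original notes.

```python
def value_iteration(transitions, gamma=0.9, tol=1e-8):
    """Sweep all states with the Bellman optimality backup until values settle.

    transitions[s][a] is a list of (probability, next_state, reward) tuples.
    This layout is a hypothetical convention chosen for the sketch.
    """
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            if not actions:  # terminal state: its value stays at 0
                continue
            # Best expected return over all actions available in s
            best = max(
                sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


# Hypothetical two-state MDP: "go" reaches the goal with reward 1
mdp = {
    "start": {"go": [(1.0, "goal", 1.0)], "stay": [(1.0, "start", 0.0)]},
    "goal": {},
}
values = value_iteration(mdp)
```

Note that the function needs the full transition and reward model up front, which is exactly the requirement that model-free methods drop.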

- Model-free

- samples the environment by carrying out exploratory actions and uses the experience gained to directly estimate value functions

- Examples

- Monte Carlo Methods

- Temporal-Difference Learning
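To make the model-free idea concrete, the sketch below shows a single TD(0) update from one observed transition. The function name `td0_update`, the state labels, and the step size and discount values are illustrative assumptions.

```python
def td0_update(values, state, reward, next_state, alpha=0.1, gamma=0.9):
    """Move V(state) a small step toward the TD target r + gamma * V(next_state)."""
    td_target = reward + gamma * values[next_state]
    values[state] += alpha * (td_target - values[state])
    return values


# One sampled transition A -> B with reward 0.5 (made-up numbers)
values = {"A": 0.0, "B": 1.0}
td0_update(values, "A", reward=0.5, next_state="B")
```

No transition model appears anywhere: the update uses only the sampled reward and next state.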

Dealing with Discrete and Continuous Spaces

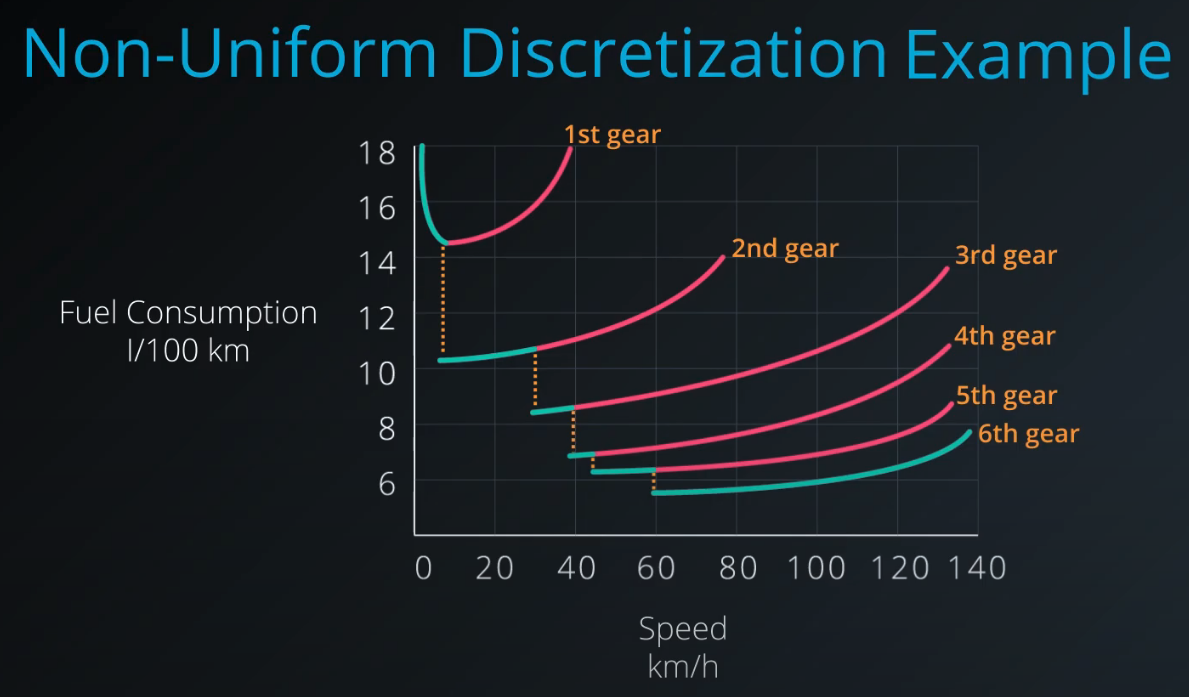

- Discretization: Converting a continuous space into a discrete space

- Function Approximation

[Non-uniform Discretization, image from Udacity 893]
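A minimal sketch of uniform discretization, assuming a box-shaped state space with known lower and upper bounds per dimension. The helper names `make_grid` and `discretize` and the bounds used here are hypothetical. A non-uniform grid would only change how the split points are chosen.

```python
from bisect import bisect_right


def make_grid(low, high, bins):
    """Return, per dimension, the interior split points of a uniform grid."""
    grid = []
    for lo, hi, n in zip(low, high, bins):
        step = (hi - lo) / n
        grid.append([lo + step * i for i in range(1, n)])
    return grid


def discretize(sample, grid):
    """Map a continuous sample to a tuple of integer bin indices.

    Values outside the bounds fall into the first or last bin.
    """
    return tuple(bisect_right(splits, x) for x, splits in zip(sample, grid))


# Two-dimensional space with 10 bins per dimension (made-up bounds)
grid = make_grid(low=[-1.0, -5.0], high=[1.0, 5.0], bins=[10, 10])
cell = discretize([-0.25, 3.2], grid)
```

The resulting tuple can index a Q-table directly, so tabular algorithms apply unchanged.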

OpenAI Environments

- Discrete(): refers to a discrete space

- Box(): indicates a continuous space

Tile Coding

- Adaptive Tile Coding

- does not rely on a human to specify a discretization ahead of time

- the resulting space is appropriately partitioned based on its complexity

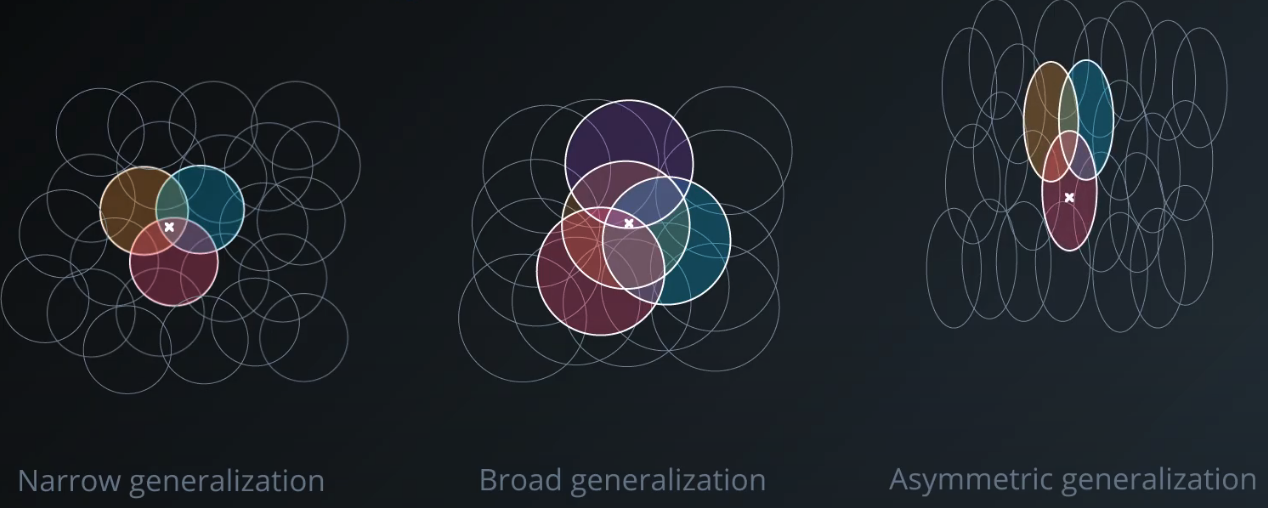
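For comparison with the adaptive variant, here is a sketch of plain (fixed) tile coding: several copies of one uniform grid, each shifted by its own offset, so a state activates one tile per tiling. The function name `tile_indices`, the offsets, and the bounds are assumptions chosen for illustration. This is not an implementation of adaptive tile coding.

```python
import math


def tile_indices(sample, low, high, bins, offsets):
    """Return one grid cell per tiling for a continuous sample.

    Each tiling is the same uniform grid shifted by its own offset.
    """
    active = []
    for offset in offsets:
        cell = []
        for x, lo, hi, n, off in zip(sample, low, high, bins, offset):
            width = (hi - lo) / n
            idx = math.floor((x - lo - off) / width)
            cell.append(min(max(idx, 0), n - 1))  # clamp to the grid
        active.append(tuple(cell))
    return active


# Three 4x4 tilings over the unit square (made-up offsets)
low, high, bins = [0.0, 0.0], [1.0, 1.0], [4, 4]
offsets = [(0.0, 0.0), (0.1, 0.1), (-0.1, -0.1)]
tiles = tile_indices([0.3, 0.55], low, high, bins, offsets)
```

Nearby states share some but not all active tiles, which is where the generalization comes from.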

Coarse Coding

- Generalization

- Narrow generalization

- Broad generalization

- Asymmetric generalization

- Radial Basis Functions

[Coarse Coding, image from Udacity 893]

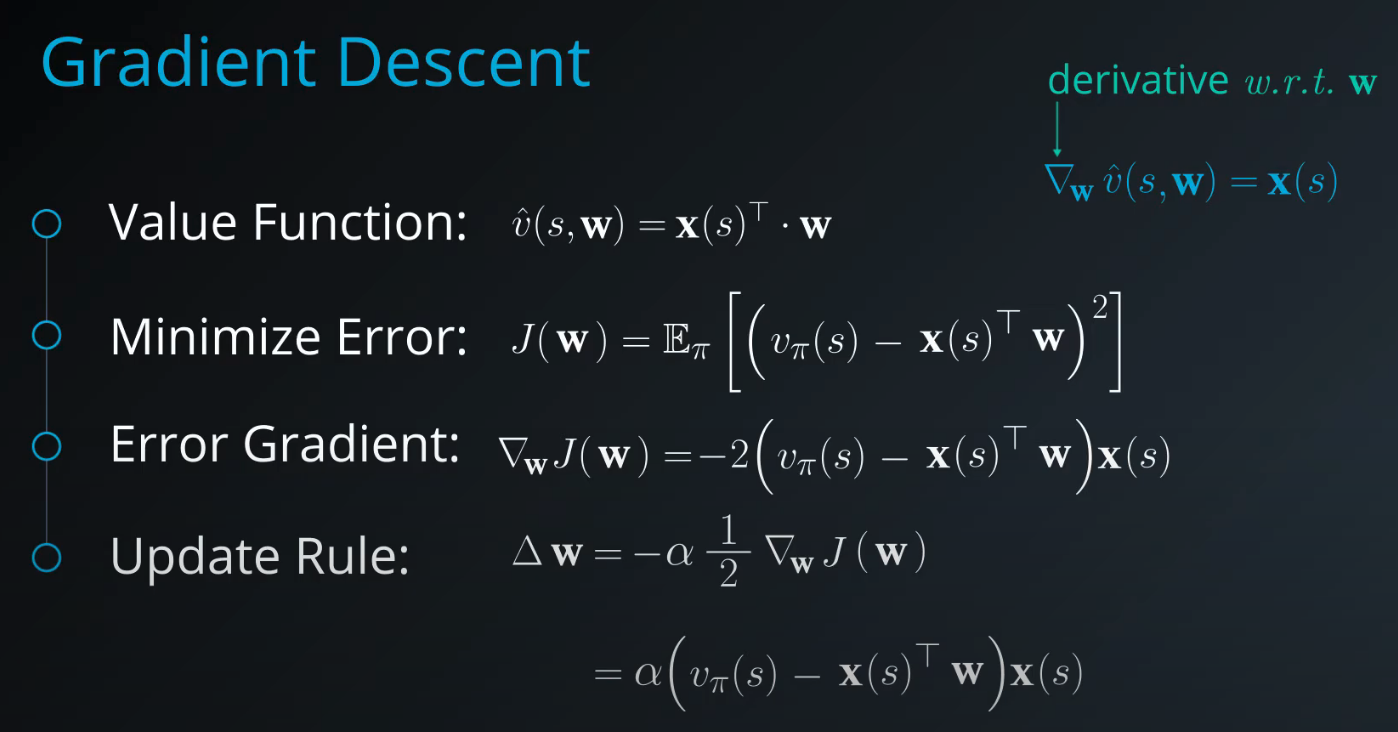
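Radial basis functions can be seen as a smooth version of coarse coding: instead of a feature being on or off, its response decays with distance from a center. The sketch below assumes Gaussian basis functions with a shared width `sigma`. The centers and the name `rbf_features` are hypothetical.

```python
import math


def rbf_features(state, centers, sigma=0.5):
    """Gaussian response of each center: exp(-||s - c||^2 / (2 * sigma^2))."""
    features = []
    for center in centers:
        sq_dist = sum((s - c) ** 2 for s, c in zip(state, center))
        features.append(math.exp(-sq_dist / (2.0 * sigma ** 2)))
    return features


# Three made-up centers; the state sits exactly on the first one
centers = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
features = rbf_features((0.0, 0.0), centers)
```

A larger `sigma` gives broader generalization and a smaller one gives narrower generalization, mirroring the circle sizes in coarse coding.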

Function Approximation

- State value approximation

- linear function approximation: $\hat{v}(s,w) = x(s)^T \cdot w$

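- Goal: minimize the squared error between the true value $v_\pi(s)$ and the estimate $\hat{v}(s, w)$

- Use gradient descent: since $\nabla_w \hat{v}(s, w) = x(s)$, the update is $\Delta w = \alpha \, (v_\pi(s) - \hat{v}(s, w)) \, x(s)$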

- Action value approximation

[Linear Function Approximation]
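The linear state-value case can be sketched in a few lines. The example assumes the target value for a state is simply given. In practice it would be a Monte Carlo return or a TD target. The feature vector, target, and step size are made-up numbers.

```python
def predict(features, weights):
    """Linear value estimate: v_hat(s, w) = x(s)^T w."""
    return sum(x * w for x, w in zip(features, weights))


def gradient_step(features, weights, target, alpha=0.1):
    """One gradient-descent step on the squared error (target - v_hat)^2 / 2.

    The gradient of v_hat with respect to w is x(s), so the update is
    w <- w + alpha * (target - v_hat) * x(s).
    """
    error = target - predict(features, weights)
    return [w + alpha * error * x for x, w in zip(features, weights)]


# Repeatedly fit a single state with feature vector x(s) = [1.0, 0.5]
features = [1.0, 0.5]
weights = [0.0, 0.0]
for _ in range(200):
    weights = gradient_step(features, weights, target=2.0)
estimate = predict(features, weights)
```

For action values the same update applies once the feature vector depends on both state and action, i.e. $x(s, a)$ in place of $x(s)$.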