Packages

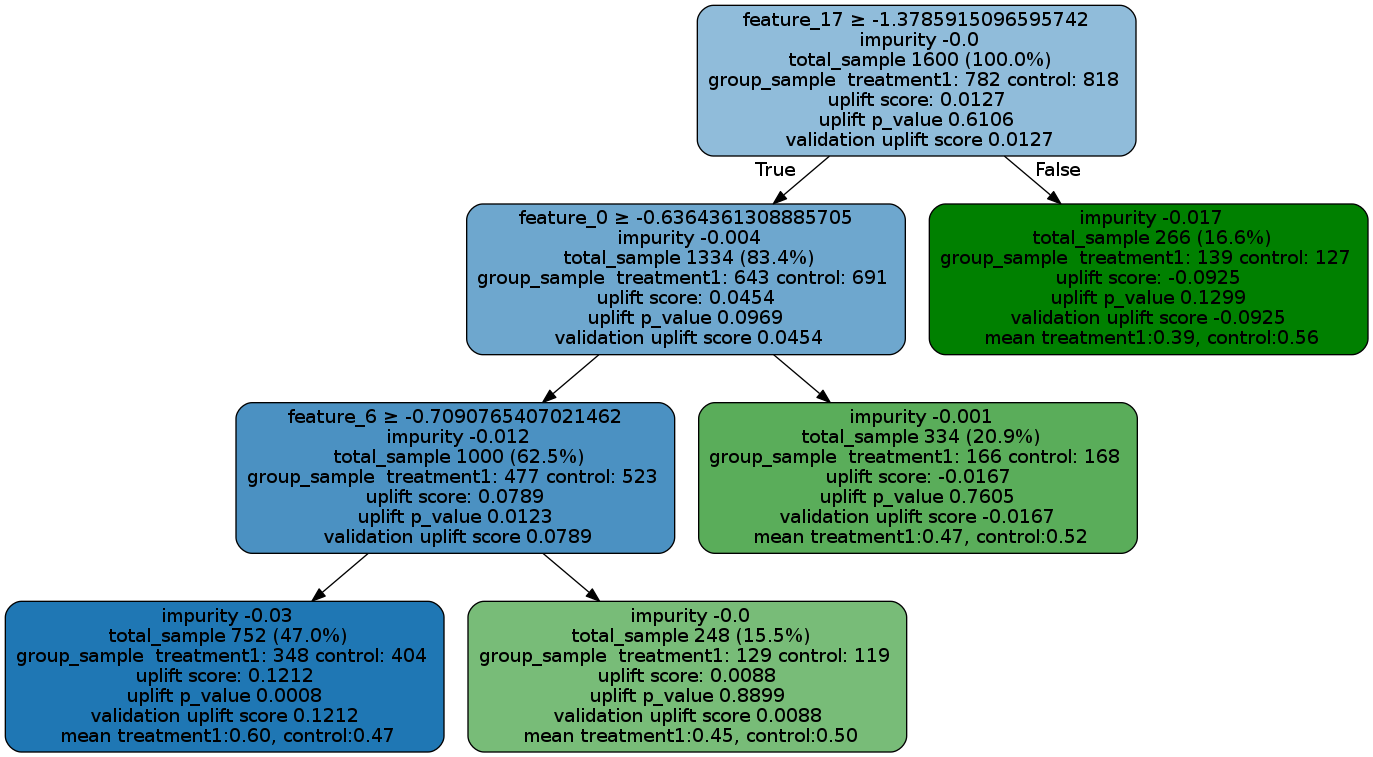

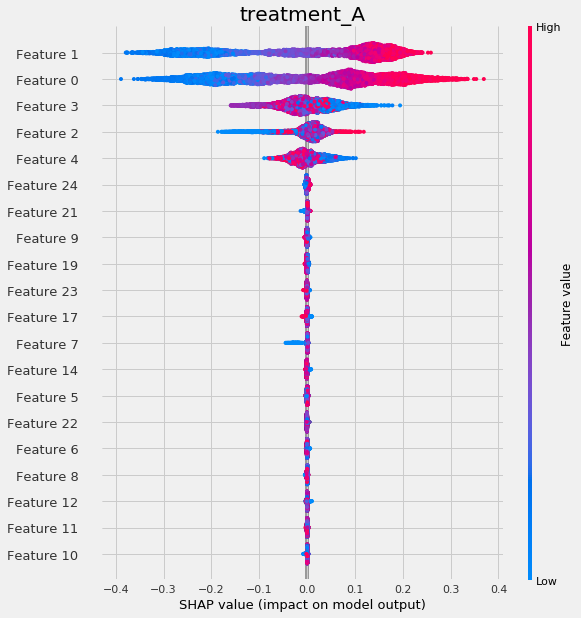

causalml

Uplift Tree Visualization using causalml

Meta Learner Feature Importances using causalml

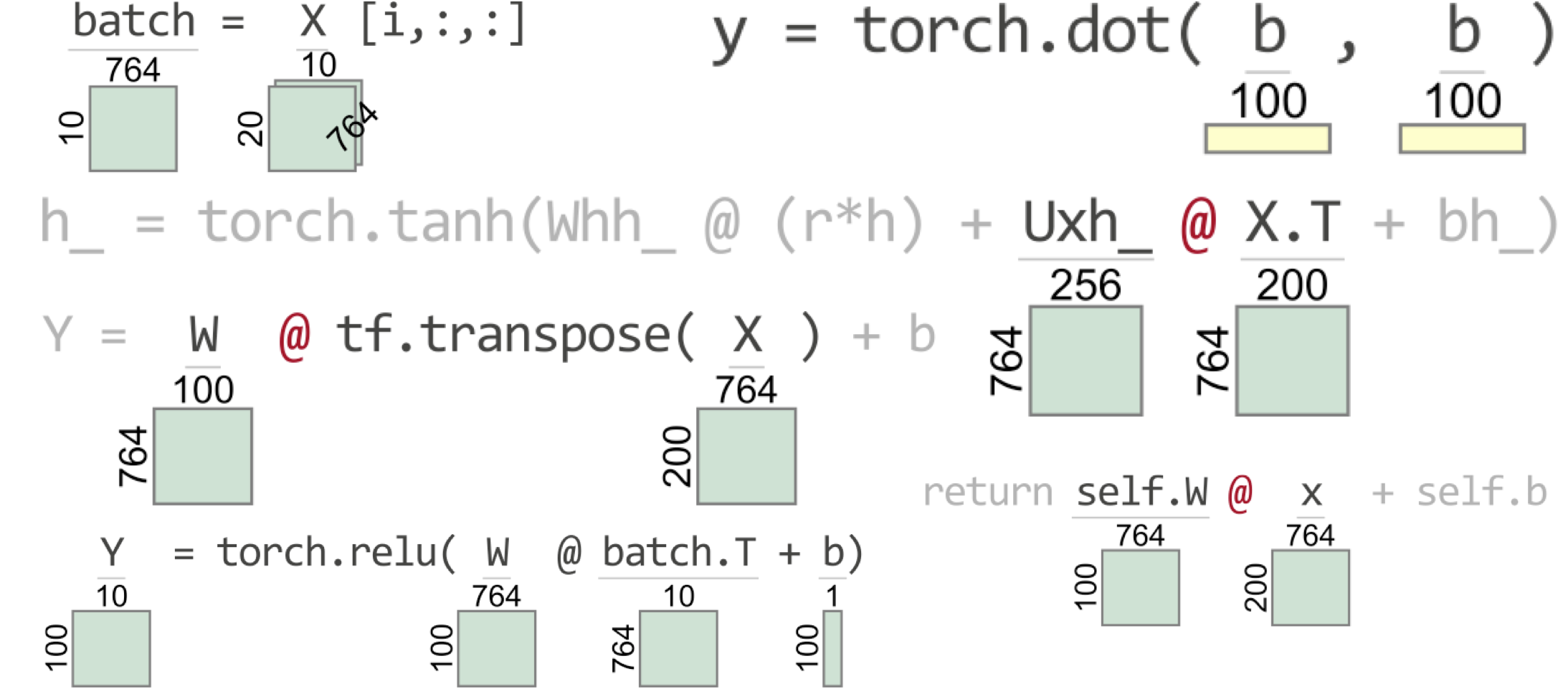

Explained.ai

- Clarifying exceptions and visualizing tensor operations in deep learning code

Image from Explained.ai

Tensor Sensor

It works with TensorFlow, PyTorch, JAX, and NumPy, as well as higher-level libraries like Keras and fastai.

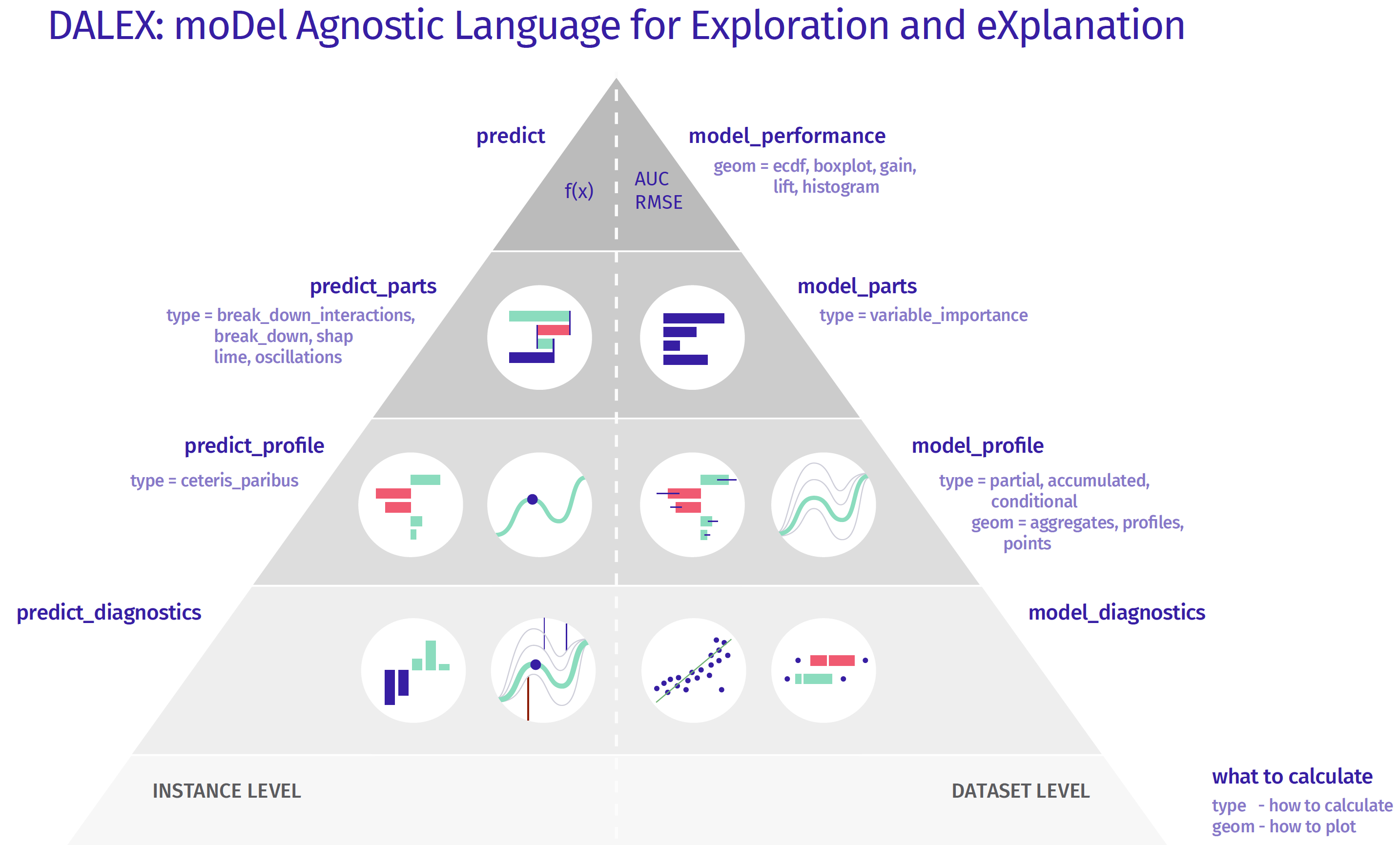

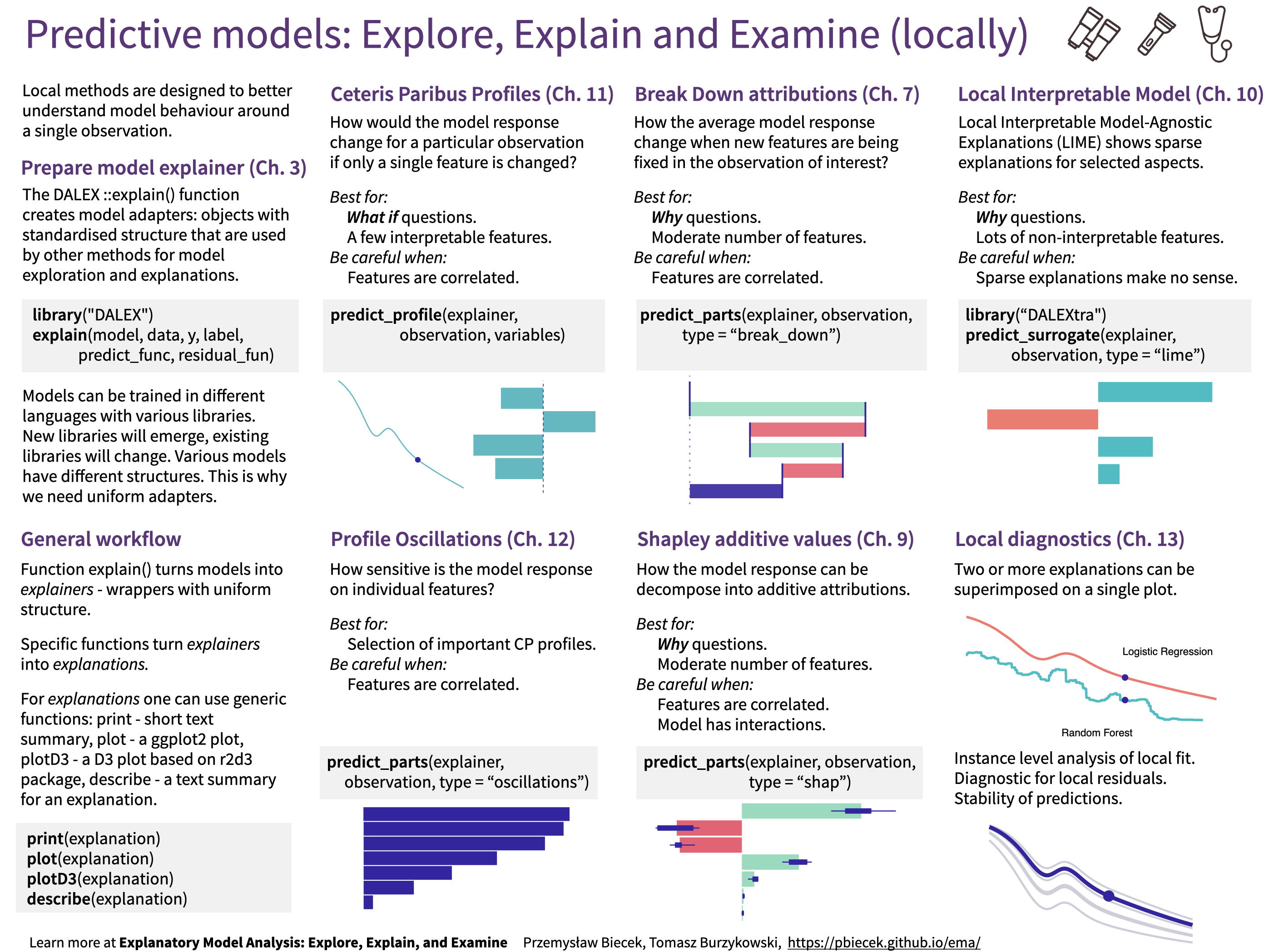

DALEX

- Gentle introduction to DALEX with examples

- Installation

pip install dalex -U

DALEXtra

- scikit-learn

- keras

- H2O

- tidymodels

- xgboost

- mlr or mlr3

DALEX functions, image from DALEX

Why

- From DALEX: It’s clear that we need to control algorithms that may affect us. Such control is in our civic rights. Here we propose three requirements that any predictive model should fulfill.

- Prediction’s justifications

- For every prediction of a model one should be able to understand which variables affect the prediction and how strongly.

- Variable attribution to final prediction.

- Prediction’s speculations

- For every prediction of a model one should be able to understand how the model prediction would change if input variables were changed.

- Hypothesizing about what-if scenarios.

- Prediction’s validations

- For every prediction of a model one should be able to verify how strong the evidence is that confirms this particular prediction.
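
The first two requirements can be illustrated without any library. The sketch below is a minimal pure-Python illustration, not DALEX's implementation: `black_box`, `background`, `break_down`, and `what_if` are hypothetical names introduced here. `break_down` attributes a prediction by fixing variables one at a time, in a given order, and recording the change in the mean prediction over background data. `what_if` varies a single variable while holding the others fixed.

```python
from statistics import mean


def black_box(row):
    # Hypothetical model: any callable mapping a feature dict to a number.
    return 2.0 * row["age"] + 0.5 * row["income"] + 0.1 * row["age"] * row["income"]


# Hypothetical background data used to compute average predictions.
background = [
    {"age": 1.0, "income": 2.0},
    {"age": 3.0, "income": 4.0},
    {"age": 5.0, "income": 6.0},
]


def break_down(model, data, observation, order):
    """Attribute a prediction by fixing variables one at a time, in `order`."""
    rows = [dict(r) for r in data]  # copy so the caller's data is untouched
    baseline = mean(model(r) for r in rows)
    previous = baseline
    contributions = {}
    for name in order:
        # Fix this variable to the observation's value in every background row.
        for r in rows:
            r[name] = observation[name]
        current = mean(model(r) for r in rows)
        contributions[name] = current - previous
        previous = current
    return baseline, contributions


def what_if(model, observation, name, grid):
    """Vary one variable over `grid` while holding all others fixed."""
    return [(value, model({**observation, name: value})) for value in grid]


observation = {"age": 4.0, "income": 10.0}
baseline, contributions = break_down(
    black_box, background, observation, ["age", "income"]
)
profile = what_if(black_box, observation, "age", [0.0, 1.0, 2.0])
print(baseline, contributions)
print(profile)
```

Because the last step fixes every variable, the baseline plus the contributions adds up to the model's prediction for the observation. When the model contains interactions, as this toy model does, the attribution depends on the chosen order.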

There are two ways to comply with these requirements.

- One is to use only models that fulfill these conditions by design.

- White-box models like linear regression or decision trees.

- In many cases the price for transparency is lower performance.

- The other way is to use approximated explainers – techniques that find only approximated answers, but work for any black box model.

- Here we present such techniques.
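
As one example of a model-agnostic approximate explainer, the sketch below estimates variable importance by permutation: shuffle one column, re-score the model, and report how much the loss grows. It only ever calls the model as a black box. All names (`rmse`, `permutation_importance`, the toy `model` and data) are assumptions made for illustration, and this is not presented as DALEX's own code.

```python
import random
from statistics import mean


def rmse(model, rows, targets):
    # Root mean squared error of the model on the given rows.
    return mean((model(r) - t) ** 2 for r, t in zip(rows, targets)) ** 0.5


def permutation_importance(model, rows, targets, names, seed=0):
    """Loss increase after shuffling each variable; larger means more important."""
    rng = random.Random(seed)
    base = rmse(model, rows, targets)
    importance = {}
    for name in names:
        shuffled = [r[name] for r in rows]
        rng.shuffle(shuffled)
        # Replace only this column, leaving every other variable intact.
        permuted = [{**r, name: v} for r, v in zip(rows, shuffled)]
        importance[name] = rmse(model, permuted, targets) - base
    return importance


def model(row):
    # Hypothetical black box that uses "signal" and ignores "noise".
    return 3.0 * row["signal"]


rows = [{"signal": float(i), "noise": float(i % 3)} for i in range(6)]
targets = [3.0 * r["signal"] for r in rows]

importance = permutation_importance(model, rows, targets, ["signal", "noise"])
print(importance)
```

A variable the model ignores gets an importance of zero, since shuffling it leaves every prediction unchanged. A single shuffle is noisy, so in practice the result would typically be averaged over several permutations.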